Every security expert will tell you that running agents in a sandbox is a best practice. It’s also completely useless.

If you’re not familiar, a sandbox is an isolated and secure environment in which you can run code. No outbound network connections. No ability to access files on the main operating system. Everything runs completely separately, which means it’s safe. That’s why sandboxes are used so often in malware analysis.

Running agents in a sandbox doesn’t make them secure AND useful.

Let’s go on a journey of discovery together. We’ll use Claude Code as an example. I love them, but wow…you’ll see why in a second.

First, the good news. In October 2025, Anthropic announced a new sandbox feature for Claude Code, and the security nerds did rejoice. “Security,” they shouted proudly from the mountain tops. Too much? Yeah…erhmm…onto how it works.

The sandbox isolates bash commands from your filesystem and network. This is a positive because bash commands can do dangerous things, like execute anything on your system. I’ll spare you the most commonly cited example of deleting entire directories, but know that it happens.

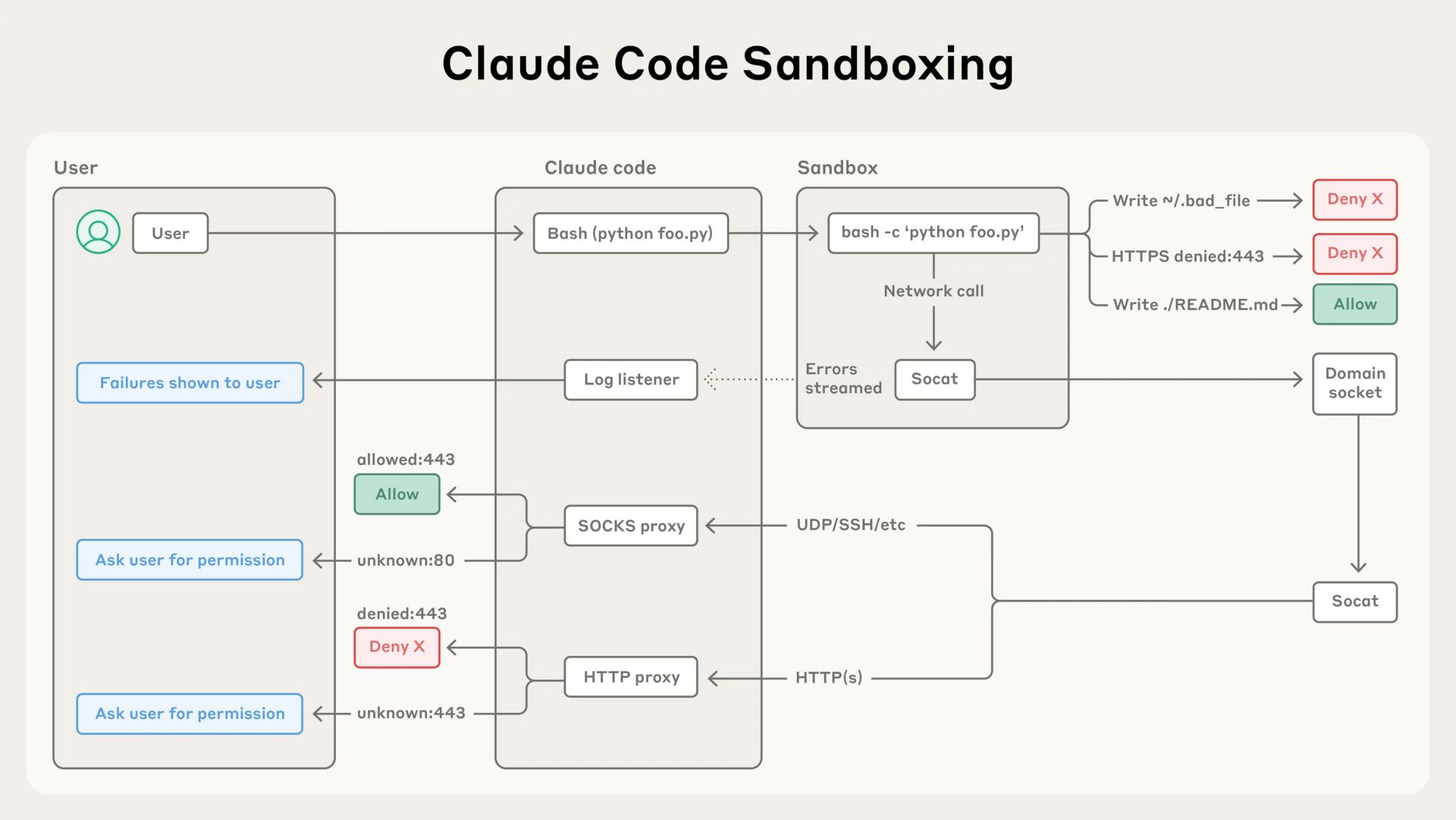

Anthropic included a nice visual showing how it protects you. Feels good.

The sandboxing feature provides two core capabilities:

File system isolation: This restricts file system access to specific directories. In Claude Code, this means write access is limited to the current working directory and its subdirectories. Here’s our first caveat: it still has read access to the entire computer, unless you explicitly deny it access to certain directories.

Network isolation: All network traffic is funneled through a proxy server running outside of the sandbox. Requests to domains the user hasn’t approved before trigger a confirmation prompt. Users can also restrict access to only the domains they have approved.
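To make those two rules concrete, here’s a minimal Python sketch of the policy as described above. This is not Claude Code’s actual configuration or implementation; the `SandboxPolicy` class, its field names, and the example paths are all hypothetical and exist only to illustrate the logic.

```python
import posixpath
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from urllib.parse import urlparse


@dataclass
class SandboxPolicy:
    """Hypothetical model of the rules described above (not a real Claude Code API)."""

    working_dir: str
    denied_read_dirs: list = field(default_factory=list)
    approved_domains: set = field(default_factory=set)

    @staticmethod
    def _is_within(path: str, root: str) -> bool:
        # Normalize first so "project/../.ssh" cannot sneak past the check.
        p = PurePosixPath(posixpath.normpath(path))
        r = PurePosixPath(posixpath.normpath(root))
        return p == r or r in p.parents

    def can_write(self, path: str) -> bool:
        # Writes are limited to the working directory and its subdirectories.
        return self._is_within(path, self.working_dir)

    def can_read(self, path: str) -> bool:
        # Reads are allowed everywhere unless a directory is explicitly denied.
        return not any(self._is_within(path, d) for d in self.denied_read_dirs)

    def needs_confirmation(self, url: str) -> bool:
        # Domains that have not been approved yet trigger a user prompt.
        return urlparse(url).hostname not in self.approved_domains


policy = SandboxPolicy(
    working_dir="/home/dev/project",
    denied_read_dirs=["/home/dev/.ssh"],
    approved_domains={"pypi.org"},
)
print(policy.can_write("/etc/hosts"))  # False: outside the working directory
print(policy.can_read("/etc/hosts"))   # True: reads are open unless denied
```

Notice the asymmetry: writes are deny-by-default, but reads are allow-by-default. That’s the caveat in code form.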

Sounds great! But guess what?

It’s disabled by default.

Well, that doesn’t seem very helpful. Surely, Anthropic put security at the forefront. Let’s check their blog for more info.

“Constantly clicking ‘approve’ slows down development cycles and can lead to ‘approval fatigue’, where users might not pay close attention to what they’re approving, and in turn making development less safe.”

Oh…so this is really just an efficiency play. Well, okay, that’s okay. The priority is enabling users. So those concerned with security at least have the option to run things in a sandbox and be secure, right?

Per Anthropic’s documentation, Claude Code has an “intentional escape hatch” that can “allow commands to run outside the sandbox when necessary.” And when is it necessary? I’m so glad you asked. When a command fails due to sandbox restrictions…

Erhmm, so things are sandboxed until it’s inconvenient for the sandbox to exist…

Let’s break this down. Users have to manually enable the sandbox. When a command hits a sandbox wall, Claude Code can retry it outside of the sandbox. That retry may prompt the user (the default behavior unless modified in permissions), and that prompt is exactly the kind Anthropic already said “users might not pay close attention” to.
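Here’s that chain of events as a small Python sketch. It’s a simplified model of the behavior described above, not Anthropic’s code. The function name, the `Outcome` enum, and the two callbacks are hypothetical, and the regular permission prompts that apply when the sandbox is off are left out of scope.

```python
from enum import Enum
from typing import Callable


class Outcome(Enum):
    RAN_SANDBOXED = "ran inside the sandbox"
    RAN_UNSANDBOXED = "ran outside the sandbox"
    BLOCKED = "did not run"


def run_with_escape_hatch(
    command: str,
    sandbox_allows: Callable[[str], bool],
    user_approves: Callable[[str], bool],
    sandbox_enabled: bool = False,  # disabled by default, per the post
) -> Outcome:
    """Hypothetical model of the escape-hatch flow; not a real Claude Code function."""
    if not sandbox_enabled:
        # No sandbox at all (regular permission prompts are out of scope here).
        return Outcome.RAN_UNSANDBOXED
    if sandbox_allows(command):
        return Outcome.RAN_SANDBOXED
    # The command hit a sandbox wall: retry outside, gated only by a user prompt.
    if user_approves(command):
        return Outcome.RAN_UNSANDBOXED
    return Outcome.BLOCKED


# A fatigued user who approves everything turns the wall into a door.
result = run_with_escape_hatch(
    "curl https://example.com",
    sandbox_allows=lambda cmd: False,
    user_approves=lambda cmd: True,
    sandbox_enabled=True,
)
print(result.value)  # ran outside the sandbox
```

The only path that ends in `BLOCKED` depends on a human saying no to a prompt.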

Said differently, an agent sandbox is a public beach.

Let’s remember something. Agents are only as useful as the access you give them. I disabled security settings with CoWork because my agent wasn’t useful when it couldn’t connect to anything. We neuter agent functionality, only to bypass the very security restrictions in the sandbox to allow the functionality we need.

Productivity wins over security when security introduces friction.

Agents are useless if you don’t connect them to anything. Conversely, agents are a risk when you connect them to data or tools. It’s a catch-22. A sandbox can contain some risks, like when an agent generates and executes code. But when that sandbox then requires connections to external data and tools, you create a connection from the “isolated” sandbox to production systems/data and SaaS products.

Is it even a sandbox at that point?

Here is what will happen in the next six months. Employees will use agents to increase their productivity. It’s a win for the employee because they can automate their boring and mundane tasks. It’s a win for the business because it can finally see the ROI of AI.

Those same employees will connect their agent to every resource imaginable. The ones in your company, external things you didn’t even know existed, everything.

That employee’s agent, the new agentic workforce, will become a single point of failure.

And security teams won’t know about it.

So, what do you actually do about it?

Step 1: Gain visibility. Find the agents operating in your environment. This spans local endpoints, SaaS products, and whatever you built in production.

Step 2: Threat model. Assess every agent. What does it connect to? What permissions does it have? What’s the blast radius when something goes wrong? Imagine an attacker sitting behind the agent, telling it what to do. Are we looking at a risk of data theft or the ability to impact production systems? Both?
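If it helps to make the threat-modeling questions concrete, here’s a rough Python sketch of an agent inventory entry and a blast-radius check. The `Connection` fields, the risk labels, and the example agent are all made up for illustration; a real assessment would need far more nuance than two booleans.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """One thing an agent is wired into (hypothetical schema)."""

    target: str
    can_read_sensitive_data: bool
    can_write_production: bool


def blast_radius(connections: list) -> set:
    """Answer 'what could an attacker driving this agent do?' in coarse terms."""
    risks = set()
    for conn in connections:
        if conn.can_read_sensitive_data:
            risks.add("data theft")
        if conn.can_write_production:
            risks.add("production impact")
    return risks


# Example inventory entry for a single (hypothetical) employee agent.
agent_connections = [
    Connection("crm-saas", can_read_sensitive_data=True, can_write_production=False),
    Connection("deploy-pipeline", can_read_sensitive_data=False, can_write_production=True),
]
print(sorted(blast_radius(agent_connections)))  # ['data theft', 'production impact']
```

The point of the exercise is the union. Each connection may look harmless on its own, while the agent as a whole answers “Both?” with a yes.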

Step 3: Get control. Create a secure baseline for the controls you want to enforce. Do you want to limit destructive commands? Do you want to put more restrictive controls on less technical users? There’s no right answer for this. It’s personal and up to the business and your risk tolerance. I recommend indexing towards user productivity, but not reckless productivity. Users need to be allowed to exceed the speed limit, but they shouldn’t be driving a tractor-trailer loaded with dynamite.

Step 4: Monitor. Keep an eye on what those agents are doing. Stay frosty for agents going rogue or taking actions that circumvent the security controls you have in place.

Your workforce is about to double, and half of it won’t be human. The time to get ahead of the wave is now. If your workforce is going agentic, let’s talk.
