The concept of a security perimeter gets complicated with agentic systems. It’s a far cry from fortifying a castle. Instead, it’s a constantly evolving, dynamic system. But sitting at the edge of these systems is a new access point into them. Data.

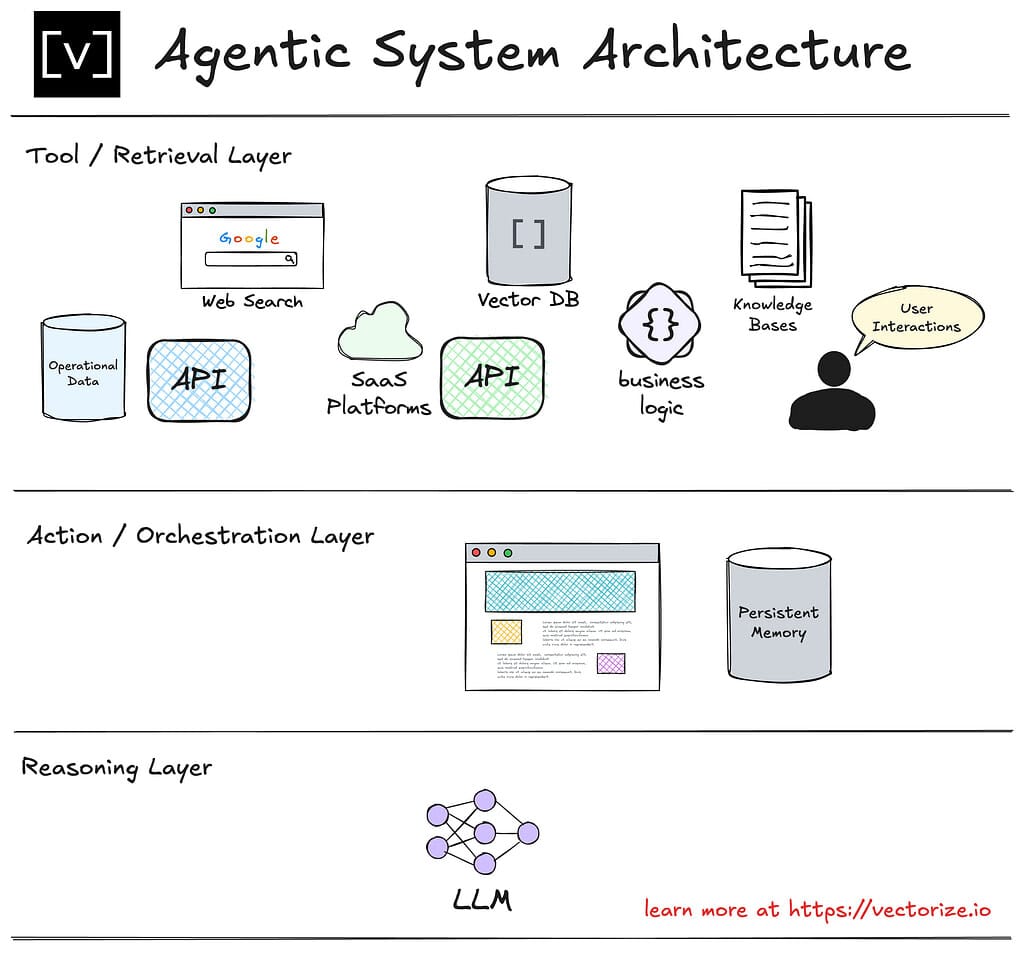

To help understand this new paradigm, let’s look at the components of an agent. Like a delicious cake, agentic systems are best thought of in layers, and this diagram from Vectorize provides a great visual of them.

Let’s expand these layers from the bottom up.

Reasoning Layer: This is the language model, the core of an agentic system and the decision maker for its processes. It can be one or more language models of any size, such as a large language model (LLM) or a small language model (SLM). It also happens to be the soft, gooey inner layer of agentic systems. It’s what we blame when an agent goes rogue, whether from its own confusion or because it was manipulated through prompt injection.

Orchestration Layer: This is the framework within which agentic systems operate. It’s part internal plumbing, part taskmaster, and part scribe. It manages an agent’s memory, state, reasoning, and planning so that the agent can accomplish its goal. It’s the connective tissue between all of the agentic components.

Retrieval Layer: This is the outer layer. It’s what gives agents power and knowledge through the data and tools they can access. Data can range from internal document stores to databases; just think of all the data you have access to in your organization. Tools can range from a browser to SaaS apps. What’s interesting is that so many tools lead to additional data: a browser pulls in web pages, and a SaaS app exposes its own records.

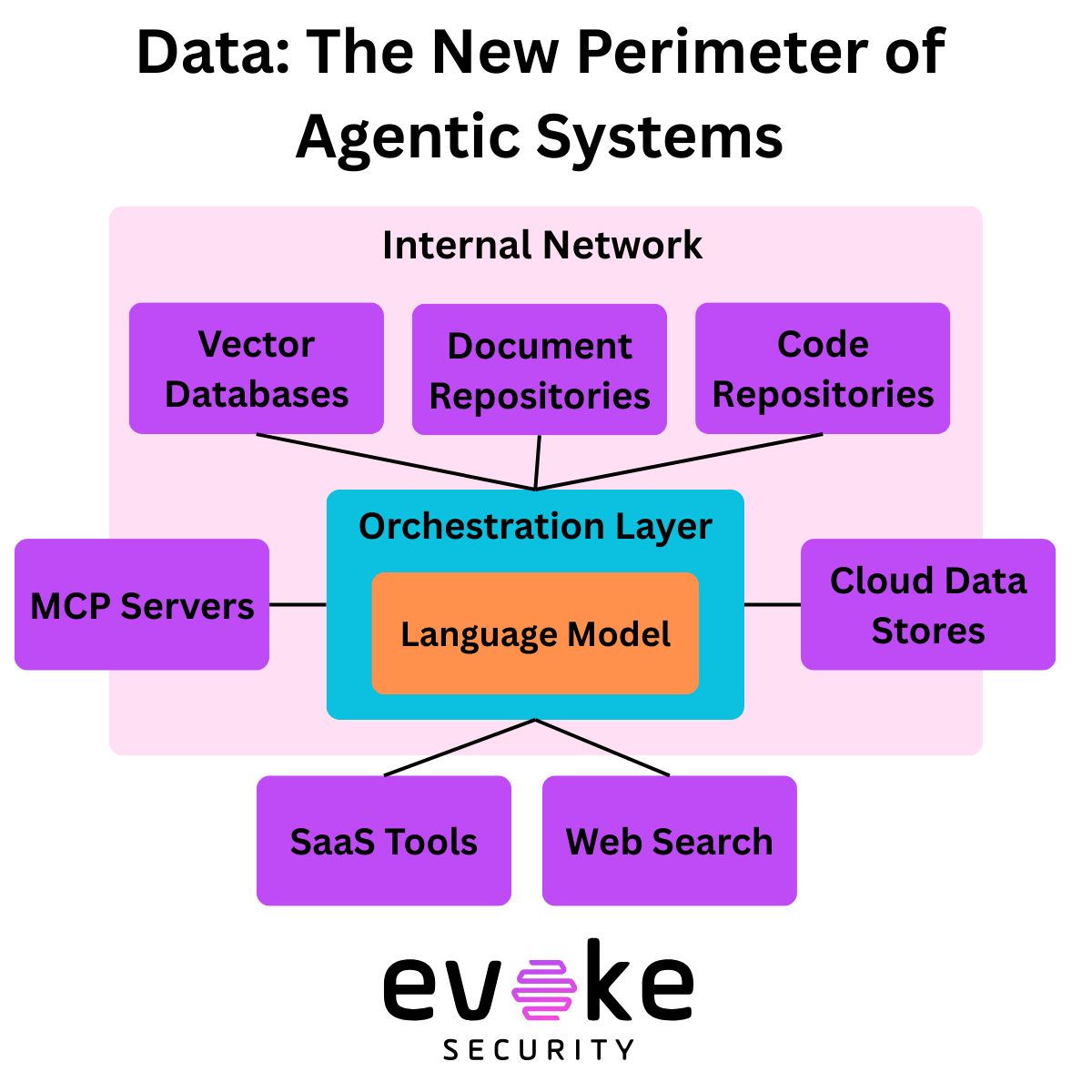

The crux of securing agentic systems is prompt injection. And the challenge with prompt injection, especially indirect prompt injection, where malicious instructions arrive hidden in content the agent retrieves rather than from the user, is data. More specifically: where can untrusted data enter the agentic system and wreak havoc? The blast radius grows with everything the agent has access to, including other agents.

This is why data is the new security perimeter of agentic systems.

To understand your risk, you have to map your agentic systems. You can’t fix your risk unless you understand it, and you can’t understand it until you map your systems. That isn’t easy when you don’t even have an inventory of where AI is being used in your environment, especially when it comes to MCP (Model Context Protocol) servers. A hyper-connected web of risk is forming right before our eyes, and we can’t even see it!
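To make “map your systems” a little more concrete, here is a minimal Python sketch. It assumes a hypothetical inventory that records which tools, data sources, and other agents each agent can access, and which of those sources accept untrusted content. It treats the blast radius as every agent an injected instruction could hijack (directly, or by being passed from agent to agent) plus everything those agents can access. The names `AgentSystemMap`, `grant`, and `blast_radius`, and the example system, are illustrative only, not part of any product or framework.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentSystemMap:
    """Hypothetical inventory of an agentic system: who can access what."""

    # agent name -> names of tools, data sources, and other agents it can access
    access: dict[str, set[str]] = field(default_factory=dict)
    # tools/data sources where outside parties can place content (web, email, ...)
    untrusted: set[str] = field(default_factory=set)

    def grant(self, agent: str, *resources: str) -> None:
        """Record that `agent` can access each of `resources`."""
        self.access.setdefault(agent, set()).update(resources)

    def is_agent(self, name: str) -> bool:
        return name in self.access

    def blast_radius(self) -> set[str]:
        """Agents an injected instruction could hijack, plus all they can access."""
        # Seed: agents that read an untrusted source can be hijacked directly.
        queue = deque(a for a, res in self.access.items() if res & self.untrusted)
        compromised: set[str] = set(queue)
        reachable: set[str] = set()
        # Breadth-first walk over the access graph.
        while queue:
            agent = queue.popleft()
            for resource in self.access[agent]:
                reachable.add(resource)
                # A hijacked agent can pass injected content to agents it calls.
                if self.is_agent(resource) and resource not in compromised:
                    compromised.add(resource)
                    queue.append(resource)
        return compromised | reachable


# Example system: one agent browses the web and delegates to another agent.
system = AgentSystemMap(untrusted={"web_browser"})
system.grant("research_agent", "web_browser", "wiki_docs", "report_agent")
system.grant("report_agent", "crm_database", "email_tool")
system.grant("hr_agent", "hr_records")

print(sorted(system.blast_radius()))
# ['crm_database', 'email_tool', 'report_agent', 'research_agent', 'web_browser', 'wiki_docs']
```

Even this toy map shows how the radius spreads: the browser exposes `research_agent`, which in turn exposes `report_agent` and its CRM and email access, while `hr_agent` stays out only because nothing connects it to untrusted data. A real map would also need paths this sketch ignores, such as a hijacked agent writing to a shared data store that another agent later reads.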

But what if you could see it? If you’re interested in how to map these systems, you might be a good fit as an evoke design partner. And if you have questions about securing AI, let’s chat.