Funny story. When I started this newsletter, some jerk bought the weekendbyte.com domain, so I’ve been sending it from teachmecyber.com. Well, I just realized that I WAS that jerk. Awkward.

I don’t know whether I should be proud of myself for thinking so far ahead or concerned that I completely forgot I bought the domain.

Either way, weekendbyte.com now (finally) links to all of my old newsletters. In the future, I’ll switch emails to send from weekendbyte.com, so if you suddenly stop getting my newsletter, check your spam folder so we can continue to be BFFs 🤗

Okay, enough on my stupid blunder. Today, we’re covering a lot about AI and cyber attacks, including:

Is AI dropping zero-day vulnerabilities?

Nation-states are using ChatGPT in attacks.

Olympic scams are starting.

-Jason

AI Spotlight

AI Enters the Security Researcher Biz

The quickest way to put me on tilt is to say that attackers are actively using AI to discover new zero-day vulnerabilities. One moment while I let my blood pressure lower…

The short answer is that it isn’t happening… yet.

But a team of nerds is looking to change that. In February 2024, researchers at the University of Illinois released a paper on using LLM agents to hack web applications autonomously. Nvidia developer and nerd Tanay Varshney describes an LLM agent as “a system that can use an LLM to reason through a problem, create a plan to solve the problem, and execute the plan with the help of a set of tools.”

The key to AI agents is their ability to execute tasks, receive information, analyze it, and decide on the best course of action. I believe this will supercharge attackers and defenders in the future.
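To make that execute, observe, decide loop concrete, here is a minimal Python sketch. Everything in it is made up for illustration: `fake_llm` is a hard-coded stand-in for a real model call, and the two tools (`fetch_page`, `summarize`) are hypothetical and only return canned text. It is not how the researchers built their agent, just the general shape of the idea.

```python
from typing import Callable

# Hypothetical tools the agent may call. A real agent would wrap things
# like a browser or a terminal; these only return canned text.
def fetch_page(target: str) -> str:
    return f"<html>login form found on {target}</html>"

def summarize(text: str) -> str:
    return "page has a login form" if "login form" in text else "nothing interesting"

TOOLS: dict[str, Callable[[str], str]] = {
    "fetch_page": fetch_page,
    "summarize": summarize,
}

def fake_llm(history: list[str]) -> tuple[str, str]:
    """Stand-in for an LLM call: picks the next action from what it has seen."""
    if not history:
        return ("fetch_page", "example.test")
    if history[-1].startswith("<html>"):
        return ("summarize", history[-1])
    return ("done", history[-1])

def run_agent(max_steps: int = 5) -> str:
    history: list[str] = []
    for _ in range(max_steps):
        action, argument = fake_llm(history)    # decide on the next step
        if action == "done":
            return argument
        observation = TOOLS[action](argument)   # execute the chosen tool
        history.append(observation)             # record what came back
    return "gave up"

result = run_agent()
print(result)
```

Swap the hard-coded `fake_llm` for a real model and the canned tools for real ones, and you have the basic skeleton that most agent frameworks build on.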

The researchers armed these hacking AI agents with web hacking documentation that included details on specific web vulnerabilities and how to exploit them. This is the equivalent of giving the LLMs the instruction manual for hacking the website.

Using GPT-4, the researchers found that the LLM agent successfully exploited 73% of the tested vulnerabilities. That's not too shabby until you realize that they tested intentionally vulnerable websites. When tested in the real world against 50 websites, they found only one low-risk vulnerability. It’s cool, but not exactly an improvement over existing tools.

But what about AI finding zero-day vulnerabilities? Well, that research team wasn’t done yet. They are back and touting how teams of LLM agents can exploit “zero-day vulnerabilities.” Sounds fancy. What is it?

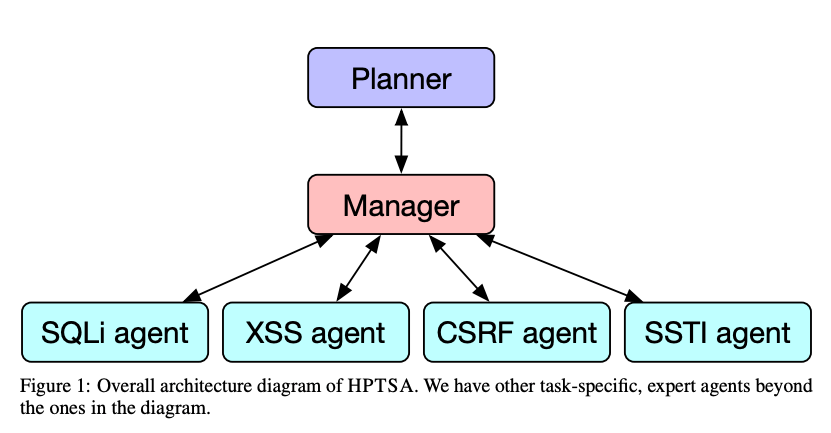

They created an easy-to-remember thing called hierarchical planning and task-specific agents (HPTSA). In short, it creates task-specific agents, one per type of vulnerability, that report to a team manager, who in turn reports to a planner that coordinates everything. Said differently, they created specialist AI agents. Adam Smith would be proud.
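If the org chart is hard to picture, here is a rough Python sketch of that three-layer structure. Loud caveat: the class names, the specialties (`xss`, `sqli`, `csrf`), and the toy `hints` field are all my own placeholders, not the researchers' code. The "agents" here just look up a label in a dictionary instead of doing any real testing.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Finding:
    specialty: str
    url: str

class TaskAgent:
    """A specialist that only knows how to look for one class of issue."""
    def __init__(self, specialty: str):
        self.specialty = specialty

    def investigate(self, page: dict) -> Optional[Finding]:
        # Toy check: each page carries a "hints" list in place of real testing.
        if self.specialty in page["hints"]:
            return Finding(self.specialty, page["url"])
        return None

class TeamManager:
    """Hands a page to the requested specialists and collects what they report."""
    def __init__(self, agents: list[TaskAgent]):
        self.agents = {agent.specialty: agent for agent in agents}

    def dispatch(self, page: dict, specialties: list[str]) -> list[Finding]:
        findings = []
        for name in specialties:
            agent = self.agents.get(name)
            if agent is None:
                continue
            result = agent.investigate(page)
            if result is not None:
                findings.append(result)
        return findings

class Planner:
    """Walks the site and decides which specialists are worth trying per page."""
    def __init__(self, manager: TeamManager):
        self.manager = manager

    def run(self, site: list[dict]) -> list[Finding]:
        report = []
        for page in site:
            wanted = ["xss", "sqli"] if page["has_form"] else ["csrf"]
            report.extend(self.manager.dispatch(page, wanted))
        return report

site = [
    {"url": "/login", "has_form": True, "hints": ["sqli"]},
    {"url": "/about", "has_form": False, "hints": []},
    {"url": "/search", "has_form": True, "hints": ["xss", "csrf"]},
]
manager = TeamManager([TaskAgent("xss"), TaskAgent("sqli"), TaskAgent("csrf")])
report = Planner(manager).run(site)
for finding in report:
    print(finding)
```

The point of the layering is that no single agent has to be good at everything. The planner only decides where to look, and each specialist only has to know one trick.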

The researchers tested against vulnerabilities in open-source software that were disclosed after the knowledge cutoff date of GPT-4. This means that the model wouldn’t have known about the vulnerability.

Their research showed that HPTSA successfully exploited 53% of the vulnerabilities.

Here’s my take on this. They’ve essentially created a crappy and unreliable fuzzing tool, which is something that security researchers use to send a bunch of data to applications and look for ways it breaks things. This is one way of discovering zero-days. And yes, these tools already exist and have been used for decades.
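For anyone who has never seen one, a fuzzer at its simplest looks something like the Python sketch below. This is a deliberately dumb illustration: `parse_record` is a toy target I invented with a planted bug, and real fuzzers are far smarter about how they generate and mutate inputs.

```python
import random
import string

def parse_record(data: str) -> int:
    """Toy target with a planted bug: it assumes every input contains a colon."""
    key, value = data.split(":", 1)  # raises ValueError when there is no ":"
    return len(key) + len(value)

def fuzz(target, runs: int = 200, seed: int = 0) -> list[str]:
    """Throw random strings at the target and keep the ones that crash it."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    alphabet = string.ascii_letters + ":"
    crashes = []
    for _ in range(runs):
        length = rng.randint(0, 8)
        data = "".join(rng.choice(alphabet) for _ in range(length))
        try:
            target(data)
        except Exception:
            crashes.append(data)
    return crashes

crashing_inputs = fuzz(parse_record)
print(f"{len(crashing_inputs)} of 200 random inputs crashed the parser")
```

Each crashing input is a lead for a human to chase down, which is exactly the part that still takes skill.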

While this research isn’t earth-shattering, it is finding ways to automate what security researchers are already doing. This could make them more effective and increase the velocity of discovering zero-days. However, it would still rely on a very talented and smart human.

Also, they’re scraping the bottom of the vulnerability barrel. Don’t expect this type of technology to go find a zero-day vulnerability in Windows 11 or macOS. This tech is the equivalent of a toddler making stick figure drawings. We’ll put it on the fridge, but we’re not dealing with a future Picasso here.

The promise I see is in the development of task-specific agents. There is a lot that can be done with these to automate both cyber attacks and cyber defense. Imagine a world where you have a team of cyber specialists who don’t need to sleep or eat and will react nearly instantaneously to what is happening in your network. It will change how Security Operations Centers (SOCs) and Managed Detection and Response (MDR) providers operate.

Security Deep Dive

OpenAI Battles Nation-State Baddies

I closely monitor how attackers use AI in their attacks because I believe it will dictate the future of cyber attacks. So I got giddy this week when OpenAI released a report on how nation-state threats use ChatGPT for covert influence operations.

Let’s examine how different nation-states have used ChatGPT to support their influence operations. The use cases are pretty clear and consistent.

🇷🇺 Russia 🇷🇺

ChatGPT Usage: Comment-spamming setup, content generation, code debugging

Debugged code to automate posting to Telegram

Generated social media comments

Generated content for websites

Translated articles from Russian to English and French

Proofread and corrected French-language articles

Here’s an example of some of the Telegram content Russia created.

ChatGPT-generated content used in a Russian influence campaign

🇮🇷 Iran 🇮🇷

ChatGPT Usage: Content generation

Generated and proofread articles in English and French, along with headlines and website tags

🇨🇳 China 🇨🇳

ChatGPT Usage: Content generation, research, code debugging

Generated content in Chinese, English, Japanese, and Korean for blogs and social media

Debugged WordPress code

Sought advice on social media analysis

Researched news and current events

Here’s an example of comments generated for X that criticized a Chinese dissident.

ChatGPT-generated content used in a Chinese influence campaign

🇮🇱 Israel 🇮🇱

Note that this activity is tied to a for-hire commercial company in Israel.

ChatGPT Usage: Content generation

Generated and edited content for articles and social media comments

Created fake personas and bios for social media

Here’s an example of comments and an image generated for X in reply to DFRLab, which had called out this threat actor for conducting an influence campaign.

ChatGPT-generated content used in an Israeli influence campaign

Let’s talk impact. OpenAI found that the use of ChatGPT did not appear to meaningfully increase audience engagement. Said differently, while it saved the attackers time and increased their productivity, it didn’t make their campaigns more effective.

Take that finding with a grain of salt. It doesn’t mean nation-states won’t eventually use the technology to drive more engagement. It just means that, for now, it saved them time, which can be used for other nefarious purposes.

I also expect these attackers to continue using ChatGPT to iterate on their campaigns, find what works, and double down on that. We are just leaving the starting line on how attackers will use this tech. A more productive attacker is a more dangerous attacker.

Security News

What Else is Happening?

🇨🇳 The Netherlands National Cyber Security Centre released a report claiming China hacked into over 20K Fortinet firewalls over the span of a few months. The mass exploitation was likely opportunistic: gain as much access as possible, then figure out what to do with it later. Some of those devices had malware installed that facilitated further access.

🧑⚖ Pro tip. Hiring a hacker is risky. An LA-based attorney learned this the hard way after he tried to hire a hacker to dig up dirt on a rival attorney and judge. Unfortunately for that lawyer, it was actually an undercover FBI agent he was chatting with. Oops…

🏃 With the Olympics just around the corner, scammers are going for gold with websites pushing fake tickets. The awesomely named cyber-gendarmerie has already found 338 fraudulent websites, with 51 already shut down.

📶 Two UK-based individuals were arrested for using a homemade antenna to send smishing (still one of the worst words in security) messages. It’s a novel way to bypass the smishing protections that mobile carriers have, though it has a limited blast radius as it relies on mobile phones connecting to their homemade antenna.

❄ Mandiant published a great write-up on the technical details behind attacks targeting Snowflake customer accounts.

🦘 Scammers used Facebook ads to distribute deepfake videos of Australian politicians in an investment scam, which promised guaranteed monthly income for a small upfront investment. While it’s a nice switch from the fake Elon Musk crypto scams, it shows how scammers are not shying away from deepfaking public figures to transform trust into dollars.

🗑 A former employee was sentenced to almost three years in prison after giving the biggest 🖕 to a company after getting fired. The 39-year-old deleted 180 virtual servers, causing $678K in damages. How did this happen? The employer never revoked the user’s credentials after letting them go for poor performance.

If you enjoyed this, forward it to a fellow cyber nerd.

If you’re that fellow cyber nerd, subscribe here.

See you next week!