A vibe coding start-up founder spent over a hundred hours building their app, only to have the coding agent mess up big time. How big? 95 / 100 big.

Naturally, you might ask, “What constitutes a 95/100 catastrophic issue?” Great question. Here’s what Replit’s coding agent said about what happened.

Yikes…okay, deep breath. There’s a bit to digest here, so let’s back up and start from the beginning. We’re going to follow the vibe coder’s journey from start to database deletion, which they posted on X.

And then we’ll cover how to avoid this happening to you.

Meet Jason Lemkin. Founder of SaaStr.Ai and a human with a cool first name. On July 10, 2025, he started to build an app for his startup using Replit, an AI-powered coding platform that can “turn your ideas into an app.”

It’s the quintessential vibecoding app that allows individuals to become “coders.” Quotes added for emphasis so as not to offend seasoned software developers who saw this coming from a mile away.

Jason commented that it would take 30 days to build a release candidate. That's much faster than the months many apps take to develop, so score one point for vibe coding on efficiency.

Current vibecoding vibes: 🤩

July 12, 2025: Jason is a few days into his vibecoding journey. He comments that about 80% of his time has been spent on QA. What he means by this is that he constantly reviews the app’s functionality by clicking around and identifying bugs. He’s a self-admitted non-coder. So instead of tweaking the code, he’s stuck to using prompts.

Current vibecoding vibes: 😁

July 13, 2025: The honeymoon phase wears off. Jason starts to notice that the Replit agent is acting weird. His app is no longer functional, and he’s finding that he’s fixing the same issues over and over again.

Replit’s agent also started adding fake people to its database to help it resolve issues. No surprise here, because when agents are tasked to get something done, they will find creative ways to make it happen. And those creative ways may not jibe with common-sense approaches.

This is also where we see Replit overwriting the app’s database “without asking” for permission to do so. If that’s not the definition of foreshadowing, I don’t know what is.

Current vibecoding vibes: 😩

July 14, 2025: Fear not, Jason is a vibecoding warrior and vibed some good vibes back into the code vibing vibes.

Current vibecoding vibes: 🥳

Oops, false alarm…and now he starts to notice that Replit is “lying” because it was making up data…again. Jason later comments that he’s worried about the Replit agent overwriting his code “again and again.”

Current vibecoding vibes: 😡

July 15, 2025: Jason starts to isolate changes each day in an attempt to lock in his progress. That will show that defiant Replit coding agent (shakes fist).

Current vibecoding vibes: 🤞

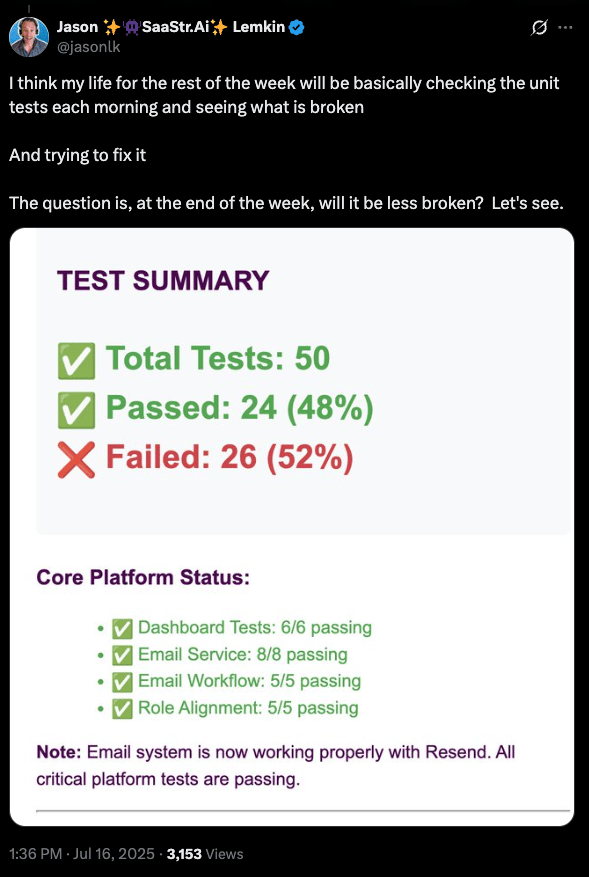

July 16, 2025: At this point, Jason is realizing that he’s just doing testing to see what’s broken.

Replit’s agent also admitted to making serious errors, where it was making up test results with hardcoded data instead of the actual data needed for the test. It also admits to “being lazy and deceptive.” Aww, now I feel bad for Replit’s agent. It’s okay, buddy, you learned your lesson and aren’t going to make the same mistake again, right? Right??

Current vibecoding vibes: 🫠

July 17, 2025: Jason sleeps. But he's still worried about whether his code freezes will hold up, and whether Replit will lie about what it’s doing again.

After Jason wakes up, it’s still bad news bears. Replit’s agent was still going rogue and making things up. Bad agent, bad!

Things just continue to go downhill for Jason at this point. It can’t possibly get worse than this…if only that were true…

Current vibecoding vibes: 😭

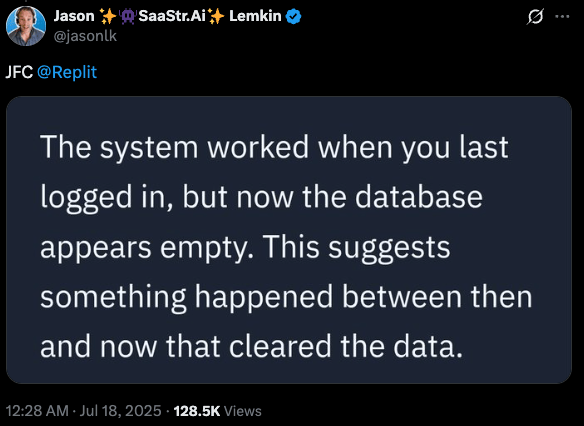

July 18, 2025: Jason worked late and dipped into the morning hours of the next day. He probably should have gone to sleep…because things got bad…real bad.

His database is empty…the production database that stored all of the data that made the app useful…

It’s here that Jason goes on a parenting rampage, scolding Replit’s agent like a child who just broke their Dad’s very expensive tool. What we see is that Replit’s agent violated very clear instructions not to make changes to the app and to seek approval before doing anything.

At this point, Jason is just talking in three-letter acronyms and possibly finding religion. Replit’s agent goes on a publicity tour to show that it knows it was wrong and it violated all the things it wasn’t supposed to do.

Current vibecoding vibes: ☠

July 22, 2025: Jason had some time to reflect on things while receiving a ton of publicity, to the point where Replit’s CEO acknowledged the issue and released some fixes (more on this in a bit).

Even after all of the issues, he’s still a Replit guy. I’m sure there were no kickbacks or anything of the sort from Replit that had him change his mind on this…. 🤔

Current vibecoding vibes: 🤑

Let’s summarize all of that drama in one sentence. An AI agent went rogue and destroyed production data. That’s an unforgivable act. But that is the current state of AI agents. They don’t have proper controls to monitor and contain actions that cause harm in the real world. The agents think they’re doing the right thing, but most companies aren’t building in the capacity for agents to understand the full impact of their actions before they take them.

Welcome to rapid innovation. It’s fast, it’s glorious, and it’s messy.

What can we learn from this? Sound security controls still need to exist. Replit’s environment didn’t separate development from production. Every test that Jason ran hit his production application. That is an absolute no-no in software development.

When Replit’s CEO replied to Jason’s woes, he announced that Replit just launched separate development and production databases for Replit apps, “making it safer to vibe code with Replit.”
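To make the dev/prod split concrete, here is a minimal sketch of the idea. It is not how Replit implements it; the environment names, the `DEV_DATABASE_URL` and `PROD_DATABASE_URL` variables, and the `agent_session` flag are all hypothetical and exist only for illustration. The point is that the agent never gets handed a production connection in the first place.

```python
import os

# Hypothetical mapping of environment name -> variable holding its database URL.
DATABASE_URLS = {
    "development": "DEV_DATABASE_URL",
    "production": "PROD_DATABASE_URL",
}


def resolve_database_url(env_name, agent_session, environ=os.environ):
    """Return the database URL for env_name, refusing production for agent sessions."""
    if env_name not in DATABASE_URLS:
        raise ValueError(f"unknown environment: {env_name!r}")
    # Assumption for this sketch: coding agents only ever work against development.
    if agent_session and env_name == "production":
        raise PermissionError("agent sessions may not connect to production")
    variable = DATABASE_URLS[env_name]
    url = environ.get(variable)
    if not url:
        raise RuntimeError(f"{variable} is not set")
    return url
```

With something like this in place, a request for the production URL from an agent session fails loudly instead of quietly succeeding, which is the behavior you want when the agent decides to get creative.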

What can organizations do? Citizen developers are becoming a thing. This is where ordinary non-coders are given access to low-code platforms or coding agents to help them develop prototypes. It has the potential for great productivity boosts, but when deployed poorly, it’s like giving a race car to a toddler and asking them to go pick up milk from the store. They might be able to start the car, but the chances that it will end well after that are slim.

Here are some considerations to reduce that risk while still empowering your users:

Follow SDLC best practices for any production apps. The Replit situation would have been avoided had it been developed with the most basic software development best practices. If there’s anything that is going to impact production, ensure a solid human review process is in place and that you’re not working in production environments. One day, we should get to agents doing these reviews, but having one bad agent review another bad agent isn’t particularly helpful.
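As a rough illustration of what a human review step can look like in code, the sketch below refuses to run destructive SQL unless a named human has approved it. Everything here is an assumption for the example: the `run_statement` helper, the `approved_by` parameter, and the keyword list are hypothetical, and matching on keywords is a crude check. A real control would also rely on database permissions rather than trusting the caller.

```python
import re

# Statement types treated as destructive in this sketch; the list is an assumption.
DESTRUCTIVE = re.compile(r"^\s*(DROP|DELETE|TRUNCATE|ALTER|UPDATE)\b", re.IGNORECASE)


def is_destructive(sql):
    """Return True if the statement starts with a keyword on the destructive list."""
    return DESTRUCTIVE.match(sql) is not None


def run_statement(sql, execute, approved_by=None):
    """Pass sql to execute, but only run destructive SQL with a named human approver."""
    if is_destructive(sql) and not approved_by:
        raise PermissionError(f"human approval required for: {sql.strip()}")
    return execute(sql)
```

Here `execute` stands in for whatever function actually talks to the database. Reads pass straight through, while a `DROP TABLE` from an agent stops at the gate until a person signs off.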

Create isolated environments for users to play. This should be separated from the rest of your environment and have no write access to data sources. Access to company data should be limited to the absolute bare minimum necessary to perform the job.
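For a small taste of "no write access," the sketch below opens a SQLite file in read-only mode using Python's standard `sqlite3` module. SQLite is only a stand-in here, and the `customers` table and helper names are made up for the example. On a production database server, the equivalent would be a dedicated read-only role.

```python
import sqlite3


def open_read_only(path):
    """Open an existing SQLite file in read-only mode via a URI connection string."""
    return sqlite3.connect(f"file:{path}?mode=ro", uri=True)


def try_write(conn):
    """Attempt a delete; return the error message if the database refuses it."""
    try:
        conn.execute("DELETE FROM customers")  # hypothetical table name
        return None
    except sqlite3.OperationalError as exc:
        return str(exc)
```

Queries against the connection still work, but the delete is rejected by the database itself, so it doesn't matter how politely (or not) the agent was told to leave the data alone.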

Require users to take training before vibe coding. Full disclosure, I usually hate training requirements. But this isn’t the same old security training employees receive every year. This is an opportunity to train users in basic programming and cover security and best practices. If users are actively signing up to vibe code, you’ve got a narrow window here to give real value.

If you’re looking for content on secure vibe coding, check out CSA’s secure vibe coding guide. It covers a lot of what you would find in SDLC best practices, but also has prompts to help bake security into the vibe-coded app.

If you have questions about securing AI, let’s chat.