The Weekend Byte is a weekly overview of the most important news and events in cybersecurity and AI, captured and analyzed by Jason Rebholz.

ChatGPT’s new o1-preview model took one hour to do what a PhD student spent the first ten months of his PhD doing. Here’s the moment he realized it. He tested it by feeding the model his paper, which included a detailed methods section explaining how he solved the problem. Sorry, bro, but don’t worry, you’re still super smart!

Today, we’re covering:

Google’s attempt to combat deepfakes.

Why you don’t record yourself stealing $250M BTC.

What happens to your stolen phone?

-Jason

Together with NordPass

You can barely remember phone numbers today. How do you expect to remember an average of 87 business-related passwords? Do you still use sticky notes or DMs to manage them all? Stop!

Use my code “weekendbyte” for a free 3-month trial or 20% off NordPass Business. With NordPass, no memorization is required for great security. Check it out here.

AI Spotlight

Google Combats Deepfakes

A recent LinkedIn post of mine covered the Coalition for Content Provenance and Authenticity (C2PA), a global standards body working to combat deepfakes and the threat of deep doubt, which is “skepticism of real media that stems from the existence of generative AI.”

The new standard is supported by big names, including Adobe, Amazon, BBC, Sony, Intel, Microsoft, OpenAI, and Google.

The C2PA standard aims to certify the provenance of digital content by attaching a digital record that accompanies the content everywhere it goes. That record tracks where the content came from and any modifications made to it. It applies to all kinds of digital content, including images, video, audio, and text, so users can validate a piece of content’s source and history.

In lieu of a lengthy explanation of how it works, this infographic from Antonio Grasso summarizes it well.

From Antonio Grasso
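For readers who want to see the core idea in code, here’s a heavily simplified Python sketch. It is not the C2PA manifest format: real C2PA manifests are signed with X.509 certificates, while this toy uses an HMAC with a hypothetical shared key (`SIGNING_KEY`) and made-up field names. It only illustrates the concept: each step in a file’s history gets a signed record that hashes the content and links to the previous record, so a verifier can spot tampering with either the content or its history.

```python
import hashlib
import hmac
import json
from typing import List, Optional

# Hypothetical shared key. Real C2PA signs manifests with X.509 certificates;
# an HMAC stands in here only to keep the sketch self-contained.
SIGNING_KEY = b"demo-signing-key"


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def canonical(obj: dict) -> bytes:
    # Stable serialization so the same record always hashes/signs the same way.
    return json.dumps(obj, sort_keys=True).encode()


def make_manifest(content: bytes, action: str, prev: Optional[dict]) -> dict:
    """Create a signed record of one step in the content's history."""
    record = {
        "content_hash": sha256_hex(content),
        "action": action,  # e.g. "created", "edited" (illustrative field names)
        "prev_hash": sha256_hex(canonical(prev)) if prev else None,
    }
    record["signature"] = hmac.new(SIGNING_KEY, canonical(record), hashlib.sha256).hexdigest()
    return record


def verify_chain(content: bytes, chain: List[dict]) -> bool:
    """Check every signature, every link, and that the last record matches the content."""
    if not chain:
        return False  # no provenance data: nothing to validate
    prev = None
    for record in chain:
        unsigned = {k: v for k, v in record.items() if k != "signature"}
        expected_sig = hmac.new(SIGNING_KEY, canonical(unsigned), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected_sig, record["signature"]):
            return False  # record was altered after signing
        if record["prev_hash"] != (sha256_hex(canonical(prev)) if prev else None):
            return False  # history was reordered or a step was removed
        prev = record
    return chain[-1]["content_hash"] == sha256_hex(content)


# Usage: a photo is created, then cropped.
original = b"raw photo bytes"
m1 = make_manifest(original, "created", None)
edited = b"cropped photo bytes"
m2 = make_manifest(edited, "edited", m1)

print(verify_chain(edited, [m1, m2]))             # True
print(verify_chain(b"swapped pixels", [m1, m2]))  # False: content no longer matches
```

Note that an empty chain fails verification, which mirrors the catch with C2PA itself: it only helps when content actually carries provenance data.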

There are two distinct challenges with any deepfake detection capability.

Detecting what’s real and what isn’t. C2PA can help with (not solve) this problem by letting users verify information about digital content. Of course, this only works when the content carries C2PA metadata, so it takes willing participants to have an impact at scale.

Detection must be built into the user’s workflow. I’m willing to bet most people won’t go out of their way to check whether something is real.

This is where the heavyweight names can help, and Google is taking a first stab at it. They announced that, over the coming months, they’re incorporating the C2PA standard into their products in the following ways:

Search: If an image contains C2PA metadata, the “About this image” feature will show whether it was created or edited with AI tools. This will also include Google DeepMind’s SynthID watermark for any Gemini-created media.

Ads: Google has started integrating C2PA metadata into their ad systems, with the aim of eventually using those signals to inform how they enforce key policies.

And they’re currently researching ways to incorporate C2PA for YouTube creators.

This sounds great, in theory. But let’s go back to workflow. Finding the “About this image” feature took me an embarrassingly long time. I was clicking around like an idiot for several minutes, finally giving in and googling where it was. Oh, the irony.

It’s great that we’ll have a way to validate media. Still, it’s so far removed from a typical workflow (e.g., just looking at an image) that I don’t see it making a significant impact outside of cases where someone REALLY wants to validate an image’s source (and that’s assuming the image has C2PA metadata attached).

Regardless, I remain optimistic. While not perfect, it’s a step toward giving people more data to make informed decisions, something society seems to be severely lacking these days.

Security Deep Dive

Pro Tip: Don’t Record Your Cyber Crimes

Settle in around the fire as we watch the downfall of a young hacker and his friends, who stole nearly a quarter of a billion dollars in BTC. The villain of our story is Malone Lam, a 20-year-old kid who bounces between LA and Miami.

There’s one thing you need to know about Malone…

Malone is a douche.

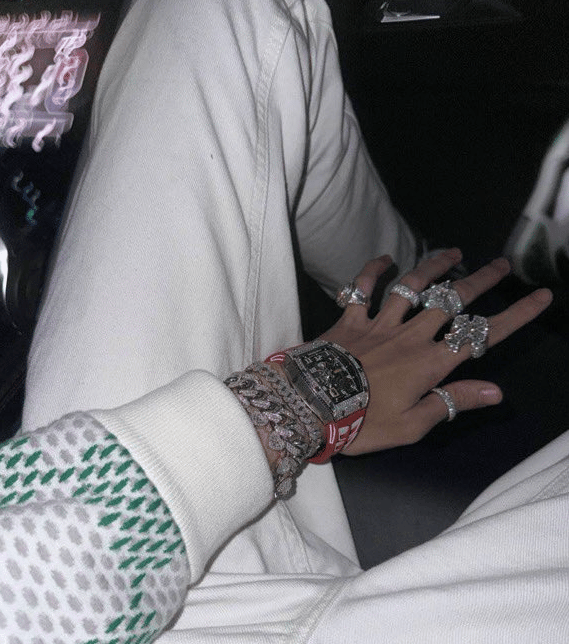

Why? Because he steals crypto and then uses it to buy luxury goods like watches, jewelry, and 10+ cars. See for yourself…

Malone’s Instagram - From ZachXBT Twitter Thread

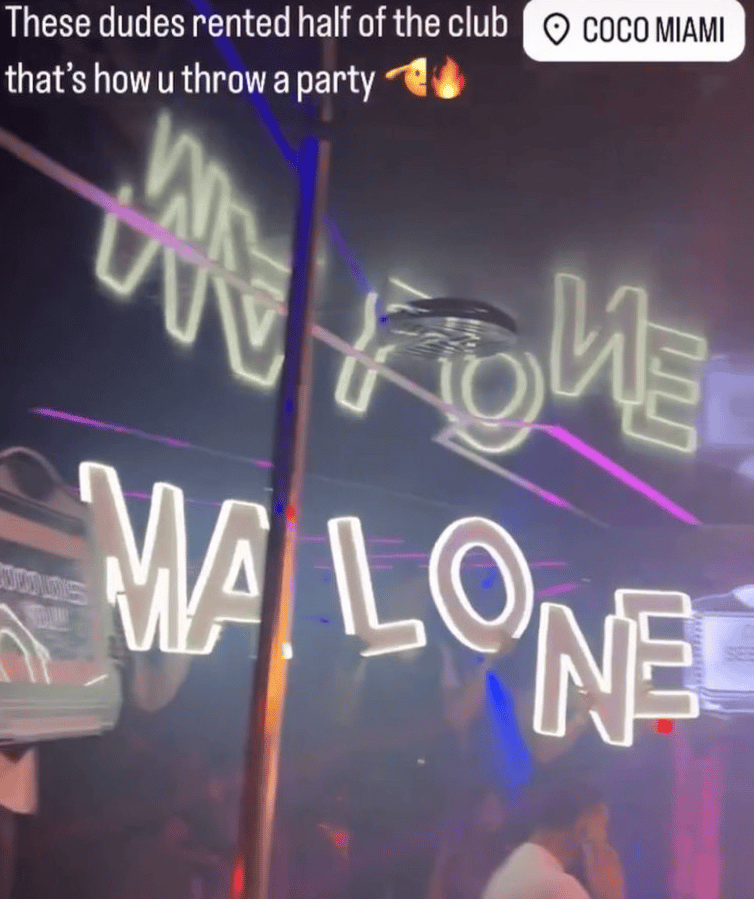

Malone also rents out clubs in Miami to celebrate his stolen crypto…

Malone’s Instagram - From ZachXBT Twitter Thread

And, of course, Malone also believes that buying expensive cars for girls will get them to like him…and he would be wrong. Sorry, champ, maybe next time. But who cares? When you steal millions in crypto, you can find someone else!

Malone’s Instagram - From ZachXBT Twitter Thread

But just as his luck ran out with the ladies, he lost something else…his freedom. That’s because the FBI arrested Malone and his friend, Jeandiel Serrano, a 21-year-old from LA, for stealing loads of crypto from people. The victim of their largest heist was someone based in Washington, D.C., from whom they stole over $230M in BTC. Why someone is sitting around with nearly a quarter of a billion in crypto is a question for another day.

The indictment stated they and others used the stolen cryptocurrency to “purchase international travel, service at nightclubs, numerous luxury automobiles, watches, jewelry, designer handbags, and to pay for rental homes in Los Angeles and Miami.”

Thankfully, these brilliant idiots made investigators’ jobs easy. Not only did they have terrible operational security (OpSec), leaking their real names and other details that pointed to their identities, but there were also video recordings of their attack.

Oh, and ex-girlfriends leaked their photos on social media, while others tagged them in posts as they were gallivanting around Miami. They’re as smooth at covering their tracks as they are with the ladies.

Let’s break down their heist. An awesome Twitter thread from ZachXBT showed how these kids pulled it all off. It was a playbook similar to one I’ve written about before, where high school kids were stealing crypto.

It worked like this:

The kids impersonated Google Support and called the victim, using this social engineering to gain access to the victim’s personal accounts.

Using that access, the kids identified all of the victim’s crypto accounts, which set up the next round of social engineering.

The kids then impersonated the Gemini cryptocurrency exchange support team and called the victim again. They claimed that the victim’s Gemini account was hacked and guided the victim through resetting their MFA and sending their Gemini funds to a wallet the kids controlled.

Not stopping there, the kids convinced the victim to share their screen using AnyDesk. During that screen share, they walked the victim through restoring their wallet, which leaked the private key, and supposedly some of the wallet files were also backed up to a OneDrive account the kids could access.

With that private key, the attackers could steal the funds from the wallet. All $230M of it.

The kids then began laundering the money and spending it on luxury goods.

During their celebrations, they started leaking information about who they were, such as the identity of group member Veer Chetal.

Desktop share - From ZachXBT Twitter Thread

All of this information and more open source intelligence (OSINT) research came together to profile the group of very douchey kids. While two were indicted, the rest are presumably still at large and trying to lie low.

Mug shot of Malone Lam - From ZachXBT Twitter Thread

As one Twitter commenter put it, “This is the face of someone who knows they’re not gonna do well in prison.” Bye-bye, Malone.

Security News

What Else is Happening?

🚽 Attackers are using a “credential flusher” to annoy users into entering their Google credentials. It forces the user’s browser into kiosk mode, which launches the browser in full screen and blocks the user from exiting. If the user enters their credentials and saves them to the browser, an accompanying infostealer wipes up those credentials.

✅ Test your deepfake detection skills with this New York Times quiz.

🦮 You have to hand it to attackers: they’re creative. To disguise a malicious file’s extension, attackers padded the file name with braille spaces, pushing the real extension out of view in Internet Explorer prompts.

📻 A German radio station learned the hard way that ransomware killed the radio star. Attackers encrypted all of the station’s music, forcing it to broadcast “emergency tape” music, which we can only guess is Rick Astley on repeat.

🪞 Be careful with those Snapchat settings. The “My Selfie” feature lets you create AI-generated images of yourself based on photos you share with it. Under a setting that’s enabled when you accept the terms, those AI-generated images can appear in your personalized ads (thankfully, just your ads). Snapchat said those pictures are only shown to you and aren’t shared with third parties…but still…change your settings.

📱 Ever wonder what happens to your phone after it’s stolen? According to Group-IB, one crimeware-as-a-service operation supports phone thieves by sending smishing messages to the phone’s owner asking for credentials, which can then be used to unlock the phone, turn off “Lost Mode,” or unlink the owner’s account from the phone.
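Back to the braille-space trick: here’s a small Python sketch of why it works and how a defender might flag it. It assumes the padding character is U+2800 (BRAILLE PATTERN BLANK), which renders as empty space. The file name, the 30-character preview width, and the extra set of invisible characters are illustrative assumptions, not details from the attack.

```python
import os
import unicodedata

# U+2800 BRAILLE PATTERN BLANK renders as empty space in most fonts.
BRAILLE_BLANK = "\u2800"
# Extra zero-width characters worth flagging (an assumption, not from the report).
OTHER_INVISIBLES = {"\u200b", "\u200c", "\u200d", "\u2060", "\ufeff"}


def has_hidden_padding(name: str) -> bool:
    """Flag names containing blank-looking characters other than a normal space."""
    for ch in name:
        if ch == BRAILLE_BLANK or ch in OTHER_INVISIBLES:
            return True
        # Zs = "space separator" (e.g. NO-BREAK SPACE, IDEOGRAPHIC SPACE).
        if unicodedata.category(ch) == "Zs" and ch != " ":
            return True
    return False


def true_extension(name: str) -> str:
    """os.path.splitext treats whatever follows the LAST dot as the extension."""
    return os.path.splitext(name)[1].lower()


# Hypothetical file name: looks like a PDF, but the real extension is .hta.
name = "invoice.pdf" + BRAILLE_BLANK * 40 + ".hta"
preview = name[:30]  # a narrow prompt that truncates long names (assumed width)

print(".hta" in preview)                 # False: the extension is pushed out of view
print(true_extension(name))              # .hta
print(has_hidden_padding(name))          # True
print(has_hidden_padding("report.pdf"))  # False
```

The point of the trick is that a user reads “invoice.pdf” followed by blank space, while the system acts on the last extension.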

If you enjoyed this, forward it to a fellow cyber nerd.

If you’re that fellow cyber nerd, subscribe here.

See you next week, nerd!